On December 15, Facebook announced its response to the “fake news” dilemma that has dominated the post-election news cycle. Showcasing a severe lack of responsibility and foresight, Facebook’s designers on News Feed have put forward a design scheme with dangerous implications.

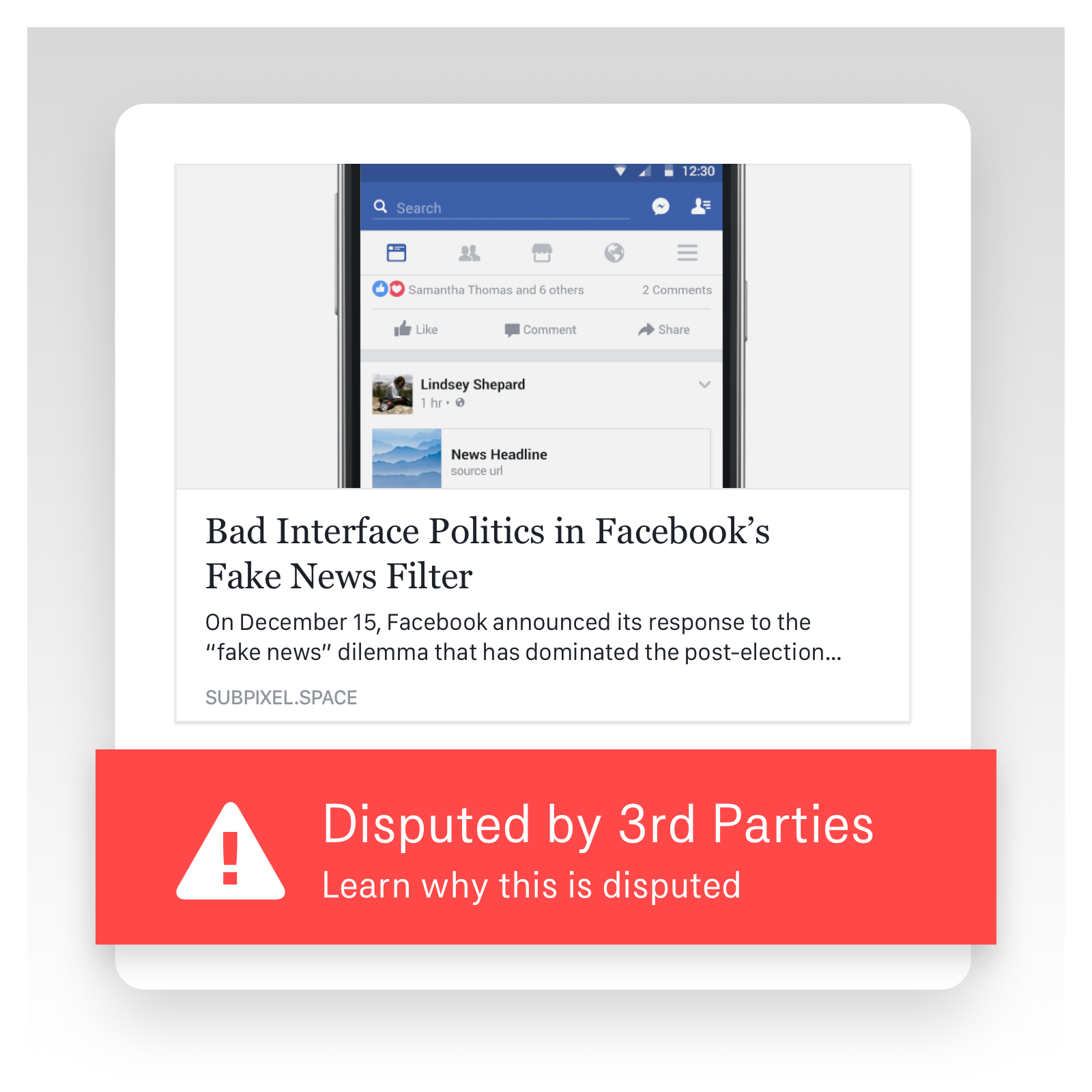

The crux of Facebook’s plan is the Fake News flag. This flag comes in the form of a bar with a prominent red triangle and exclamation point—an internationally recognized symbol for “warning”—and the message “Disputed by 3rd Party Fact-Checkers.” How does this bar come to appear beneath articles? How are articles disputed? How does an article’s designation as “fake news” affect its visibility on the news feed? By critically examining these design decisions made by Facebook, we are able to question the outcomes of those same decisions.

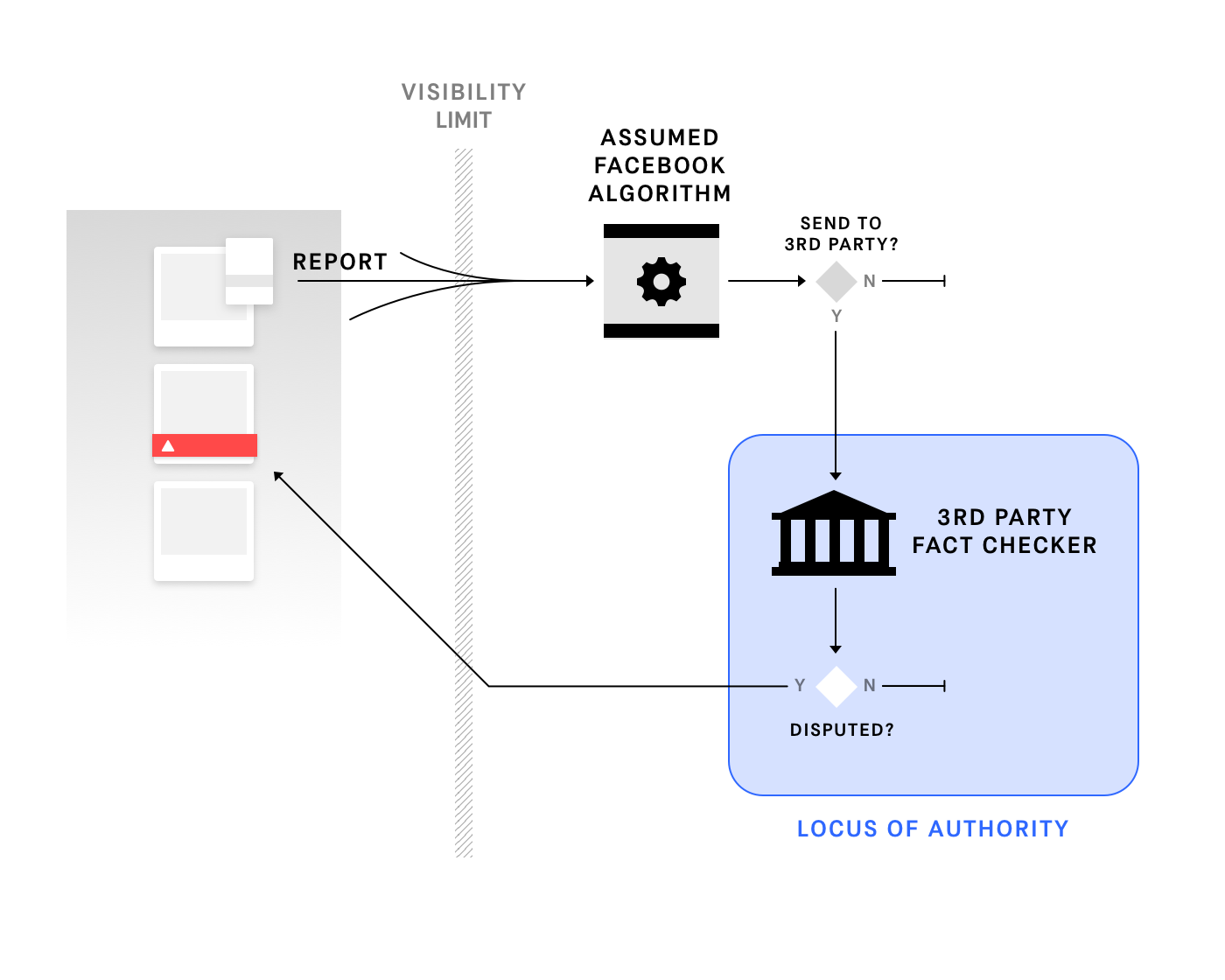

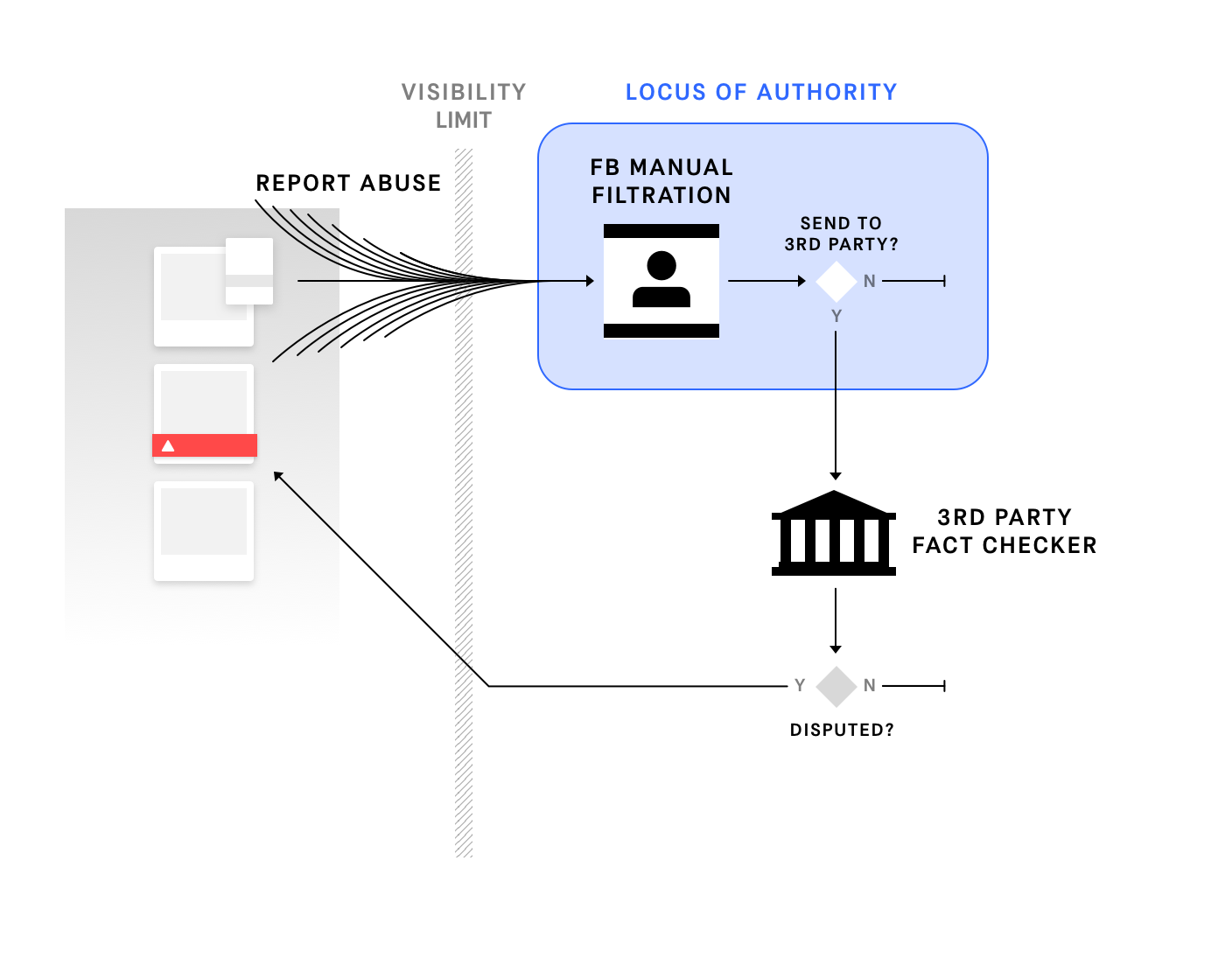

Let’s first take a look at the process and mechanics around reporting and the fake news flag.

Process

-

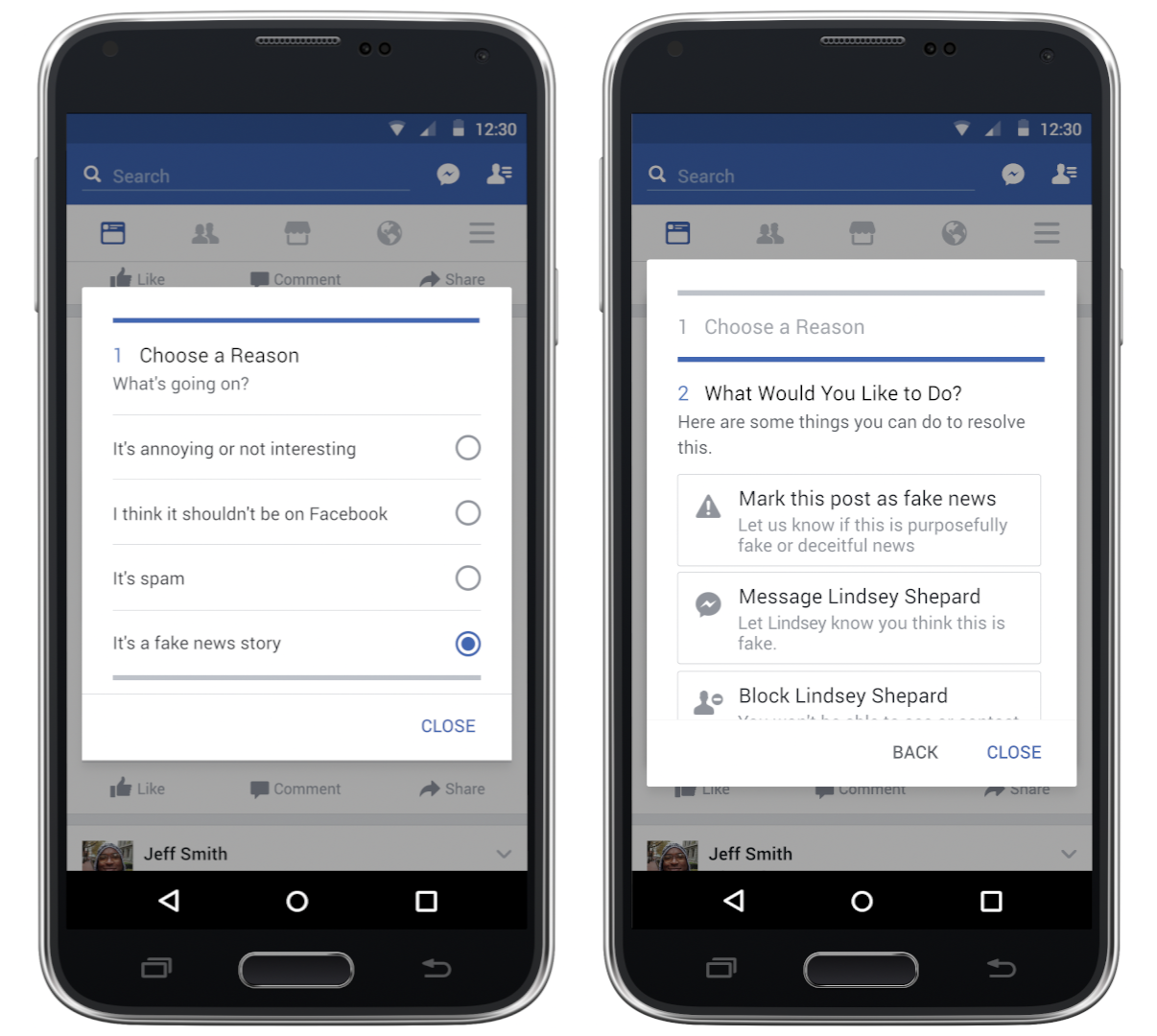

Links to external articles can be reported by anyone with the familiar Report Post menu. Facebook explains that it will rely heavily on its user community to identify and report fake news and hoaxes. This is the extent of transparency of the reporting and fact-checking process.

-

After being reported fake by some number of people—“along with other signals” (Facebook’s verbiage)—the article is sent to third party fact checking organizations.

-

If identified as fake by these third party sources, the story is designated as false inline with the article.

-

False stories are also ranked down in the news feed.

The fact check stage is the pivotal point of this entire process. Third parties serve two important roles in Facebook’s design plan: to be the actual arbiter of truth, and to be the locus of perceived responsibility for truthhood (removing that responsibility from Facebook itself). The UX patterns are well structured to achieve these goals: the process efficiently shuttles suspicious articles directly from users’ reports to the organizations, then shows results inline with disputed articles.
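The four steps above can be sketched as a small pipeline. This is only an illustration under stated assumptions: Facebook has not published its report threshold or its “other signals,” so `REPORT_THRESHOLD`, `other_signals_suspicious`, the penalty value, and the `fact_check` callback are all hypothetical stand-ins, not Facebook's actual logic.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical value: Facebook has not disclosed how many reports are needed.
REPORT_THRESHOLD = 100


@dataclass
class Article:
    url: str
    report_count: int = 0                  # step 1: users report via the Report Post menu
    other_signals_suspicious: bool = True  # stand-in for the undisclosed "other signals"
    disputed: bool = False                 # step 3: flag shown inline with the article
    rank_penalty: float = 0.0              # step 4: downranking in News Feed


def process(article: Article, fact_check: Callable[[str], bool]) -> Article:
    """Run one article through the announced flow.

    `fact_check` stands in for a third-party organization and returns True
    when it judges the story to be false.
    """
    # Step 2: only sufficiently reported articles reach the fact-checkers.
    if article.report_count < REPORT_THRESHOLD or not article.other_signals_suspicious:
        return article
    if fact_check(article.url):
        article.disputed = True     # step 3: "Disputed by 3rd Party Fact-Checkers"
        article.rank_penalty = 1.0  # step 4: hypothetical penalty value
    return article
```

In this sketch the third party only ever sees articles that pass the check in step 2, and that check is the part of the flow about which nothing has been disclosed.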

But what if we push the constraints of this design plan?

Abuse of Crowd-Sourced Falsification Reinforces the “Filter Bubble”

The process set out by Facebook is altered dramatically if users report an extraordinarily large number of articles. Why would they do that, and how might this happen? To answer these questions, it’s necessary to understand how “fake news” is being used.

In the weeks immediately following November 9th, “fake news” was used in mainstream-left criticisms of Facebook for its unwitting promotion of conservatively-oriented, factually false share-bait articles. This elicited a reaction from conservative media, who began to deploy it to challenge liberal viewpoints. Now the term “fake news” has spiralled out of control of either political faction, and is being used rampantly to discredit narratives from both sides. It has become weaponized language. The phrase “fake news” positions whoever uses it as the speaker of truth, and without any factual premise, dismisses alternative evidence as baseless. As mere language, extensive use of the term is already damaging to productive political dialogue. But when the ability to wield it is designed directly into Facebook, partisan worldviews are further aggravated. Providing the power to report “fake news” through the interface is like doling out weapons for an upcoming turf war in which each side abuses the feature to repudiate the political narrative of the other.

It is shocking that Facebook’s designers and product leaders pursued this crowd-sourced flagging ability given the well-known history of collective internet action. “Google Bombing” has been a tool of trolls and interest groups since the early 2000s. More recently, we’ve seen how /r/the_donald worked together to upvote new posts, rocketing them to the front page of Reddit. At a time when alt-right factions on 4chan and Reddit are already engaged in collective efforts to game ranking algorithms and spread (dis)information, we must assume that similar tactics can and will be used on Facebook; left-leaning Facebook users may also adopt these very same methods in response. In other words, the crowd-sourced report feature first and foremost enables self-censorship through aggressive partisan collective action. As opposed to the clearly subjective options in the report post menu, “It’s annoying or not interesting” and “I think it shouldn’t be on Facebook,” the “It’s a fake news story” option appeals to the user’s political lens and encourages them to make a judgment on objectivity.

To return to our process diagram, let’s examine what happens if people begin to report articles en masse. Firstly, it will no longer be possible to assume that all reported links are potentially false; many will not be. Subsequently, if the number of reports is too overwhelming for Facebook’s fact-checking partners, only a certain number can be forwarded on. This makes algorithmic automation of the process difficult. So who decides what articles are sent to the third-party fact-checkers? If Facebook takes on the role of triaging what articles are checked, it becomes the crucial point of filtration, replacing the third parties themselves. However, even if abuse never becomes a problem, Facebook still sits between user reports and fact-checking organizations, determining to a significant degree which reports are sent. Depending on how the program is run, its curations could easily become more or less opinionated. An example of such opinionatedness on Facebook was already seen earlier this year, when the company faced backlash over its heavy-handed curation of Trending Topics. Its curation was found to disproportionately omit conservative news stories from the list of trending items. As in the Trending Topics case, it is likely that human employees would be used to make judgments on what articles are sent to fact-checkers, which can only result in an intrinsic partisan perspective.
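To make the triage point concrete, here is a minimal sketch assuming fact-checkers can review only a fixed number of articles at a time. The report counts, the `capacity` figure, and both ranking rules are invented for illustration; nothing is publicly known about how Facebook actually prioritizes reports.

```python
from typing import Callable, Dict, List

# Hypothetical report counts per article URL.
reports: Dict[str, int] = {
    "example.com/a": 900,
    "example.com/b": 450,
    "example.com/c": 300,
    "example.com/d": 120,
}


def triage(urls: Dict[str, int], capacity: int,
           priority: Callable[[str], float]) -> List[str]:
    """Forward only the `capacity` highest-priority articles to fact-checkers."""
    ranked = sorted(urls, key=priority, reverse=True)
    return ranked[:capacity]


# Rule 1: forward the most-reported articles (open to gaming by mass reporting).
by_volume = triage(reports, 2, lambda url: reports[url])

# Rule 2: a hypothetical editorial weighting assigned by staff.
editorial_weight = {
    "example.com/a": 0.1,
    "example.com/b": 0.2,
    "example.com/c": 0.9,
    "example.com/d": 0.8,
}
by_editor = triage(reports, 2, lambda url: editorial_weight[url])
```

The same queue of reports yields two entirely different sets of articles for review. Whichever rule is chosen, the choice of rule is itself the filter.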

This gets to the core of the “fake news” problem: there can be no universal filter for truth or for critical thinking. The third parties are intended to provide nonpartisan, unbiased objectivity, but what both the “fake news” debate and this process diagram reveal is that truth is mediated by many different lenses. So Facebook’s crowd-sourced reporting ultimately gets us no closer to a good model of what to think. In fact, it enables the reverse, allowing users to self-reinforce the narratives to which they are already exposed. At worst, it makes them active participants in the literal erasure of other views from their feed of potential perspectives. And it opens the possibility for direct adjudication of the truth by Facebook.

Mediation, Dismissal, and Censorship

When we understand how truth is mediated and contextual, we can examine how Facebook’s fake news filtration teeters on the edge of censorship. As we’ve seen, relying on third parties does not make Facebook an objective part of the falsification process. The discussion above shows one way Facebook intermediates the truth via the selection of articles sent through its reporting process. Further intermediation is present in the fake news flag itself, and in the selection of fact-checking organizations.

The flag directly mediates our view of reality by expressing an opinion on what is true or false. Its ability to do this is not up for contest; it is a baseline assumption for the design of the entire feature. We can understand better how it works, however, by looking at a similar example. The fake news flag functions much the same way as Google’s SSL security warning, which appears before some websites without a valid SSL certificate. In previous iterations, Google’s warning has achieved compliance rates of up to 30%. That might seem low, but the warning is triggered after a user has already made a decision to visit the link, suggesting that many users make good on their original intent. Facebook’s fake news flag, on the other hand, alerts viewers before a decision to click through is made. Especially because many articles state the central topic, observation, or argument in the title, the flag directly challenges and delegitimizes the content before it can be engaged. Additional downranking of flagged articles in feed placement affects the number of users that can engage with them at all.

As the earlier citation of the Trending Topics debacle indicates, Facebook already edits information available to users. While these background filtrations also deserve thought and attention, here we are concerned with how the fake news flag impacts what people think before they engage with a topic. The visual foregrounding of institutional opinion is an entirely new behavior of Facebook that begs discussion on its own terms. But the fake news flag issue does share with Trending Topics a distinct lack of visibility into the process by which curative decisions are made. How many user reports are required to send an article to fact-checkers, and what are the “other signals” used to determine its eligibility? Is this an entirely algorithmic process, or are there indeed humans involved? Finally, how can we even know an article was fact-checked at all? Because of the opaqueness inherent in the process, we can only surmise its workings by taking Facebook’s announcement at face value. So should we trust that it is working at all?

Facebook is a private corporate entity that sometimes disagrees with governments on matters of personal privacy and sometimes colludes with them. When it comes to notions of truth and falsehood, might we expect it to take a similar approach? Additionally, as a publicly traded company, Facebook has a political agenda, which may or may not be visible, but which is embedded in its processes and interfaces. When notions of truth and falsehood have an effect on its agenda, might we expect it to act in accordance with that agenda?

It is impossible to continue without making some contestable political statements. Let us take a momentary step back and survey the landscape. The discourse of “fake news” comes at a time when fact-hood has long been monopolized by institutions popularly understood as politically left-leaning, including science, academia, and mainstream media. Facebook has not indicated which Poynter signatories it intends to work with, but many of the institutions on the list are typically aligned with what are perceived as liberal interests. Already, some are decrying fact-checking sites as inherently liberal and calling for the addition of conservative sources.

Facebook officially empowers liberal "fact check" sites to mark stories "disputed" and drop them in News Feed. https://t.co/DMxCncIlYw pic.twitter.com/ZBNO1z8d5B

— Phil Kerpen (@kerpen) December 15, 2016

The implementation of these News Feed features is a response to popular pressure, so could the same thing happen again with pressure from conservatives? Under the Trump administration, would Facebook add organizations that are seen to represent conservative political interests to the list of fact-checkers it works with? From over a year of campaign coverage, we’ve seen that the incoming administration feels no need to pay respect to fact. The newly passed Countering Disinformation and Propaganda Act will create a central inter-agency division of the State Department tasked with countering foreign propaganda. A comparable German government institution, just established, has made abundantly clear its intention to force Facebook to go a step further and entirely remove articles that offend its vision of the truth. What if Facebook flags an article that conflicts with a government’s official stance on some topic? Would Facebook challenge that stance’s credibility? Or would it acquiesce to de-flagging requests, or even adopt the official stance, flagging and downranking contradictory articles?

Know The Politics of Your Design

If we allow Facebook to express, through its interface, an opinion on matters of truth and falsehood, we intrinsically accept the idea that Facebook is allowed to manipulate our view of the world. Visitors to China often say that Chinese netizens are so acclimatized to similar mechanisms of censorship that they are barely disconcerted when the government forces social media platforms to vanish all evidence of articles, topics, hashtags, and people. This is a future to which we cannot grow accustomed. As the world tumbles into Cold War-like relations, governments, corporations, and increasingly technology platforms all have a stake in mainstream truth. We’re in for a confusing ride, and it’s going to be difficult enough to develop our own critical thinking filters. Expecting Facebook to do it for us is not just a bad idea, but a dangerous one.

Concretely, Facebook designers should promote transparency in the fake news reporting process and expose how decisions are made. A public repository of all reported articles and their metadata would inspire more confidence in the fact-checking process and allow coordinated upvoting activity (and suspect flags) to be easily identified by the public. Key information would include, but not be limited to: how many shares and reshares a link has; the number of reports it had and its report-to-impression ratio; a timeline of reports; how it is weighted according to the prioritization algorithm; and what fact-checkers it is being sent to. Additionally, product thinkers and designers at Facebook should exercise their responsibility to the greater public by promoting open conversation between the company, Facebook users, historians, and privacy experts. As for the public, it falls to us to hold Facebook responsible for its power, and to demand from it this transparency and open dialogue.
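As a rough sketch, one entry in such a public repository might look like the record below. The `ReportedArticle` type, its field names, and the sample values are hypothetical, chosen only to mirror the list above; they do not describe any existing Facebook data format.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class ReportedArticle:
    url: str
    shares: int        # shares and reshares of the link
    impressions: int   # times the link appeared in a feed
    report_times: List[datetime] = field(default_factory=list)  # timeline of reports
    priority_weight: float = 0.0  # weight given by the prioritization algorithm
    fact_checkers: List[str] = field(default_factory=list)      # organizations it was sent to

    @property
    def report_count(self) -> int:
        return len(self.report_times)

    @property
    def report_to_impression_ratio(self) -> float:
        # Guard against division by zero for links that were never shown.
        return self.report_count / self.impressions if self.impressions else 0.0


# Made-up sample entry, for illustration only.
entry = ReportedArticle(
    url="https://example.com/story",
    shares=1200,
    impressions=50000,
    report_times=[datetime(2016, 12, 16, 9, 0), datetime(2016, 12, 16, 9, 5)],
    fact_checkers=["Example Fact-Check Org"],
)
print(entry.report_count, entry.report_to_impression_ratio)
```

Publishing the raw report timestamps, rather than only a total, is what would let outside observers spot a sudden burst of coordinated flags.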

Designers in this era have enormous responsibility. We must take into account the cultural context of our users, and the general social climate. We must know historical user behavior on similar platforms. Our design decisions are hypotheses, the immediate and long-term outcomes of which must be thoughtfully modeled, the failure modes of which must be understood. How could these outcomes be mitigated, overwhelmed, overridden, subverted, or controlled? We cannot afford to build tools that mirror exactly our own worldviews and limit them by doing so, and we cannot build tools that allow for the monopolization of belief. I have argued here that Facebook’s News Feed designers and product managers have failed us on both counts. But it is not too late for designers at Facebook, and elsewhere, to take a principled stand and orient their practices to promote critical thought, or at the very least, a diversity of perspectives. Effective political engagement for designers starts with the products we make.